Let’s take a look at this dataset. It’s related to the Co2 emissions of different cars. It includes Engine size, Cylinders, Fuel Consumption and Co2 emissions for various car models. The question is: Given this dataset, can we predict the Co2 emission of a car using another field, such as Engine size? Quite simply, yes! We can use linear regression to predict a continuous value, such as Co2 Emission, by using other variables. Linear regression is the approximation of a linear model used to describe the relationship between two or more variables. In simple linear regression, there are two variables: a dependent variable and an independent variable. The key point in linear regression is that our dependent value should be continuous and cannot be a discrete value. However, the independent variable(s) can be measured on either a categorical or continuous measurement scale.

There are two types of linear regression models. They are simple regression and multiple regression. Simple linear regression is when one independent variable is used to estimate a dependent variable. For example, predicting Co2 emission using the EngineSize variable. When more than one independent variable is present, the process is called multiple linear regression.

To understand linear regression, we can plot our variables here. We show Engine size as an independent variable and Emission as the target value that we would like to predict. A scatterplot clearly shows the relationship between variables where changes in one variable "explain" or possibly "cause" changes in the other variable. Also, it indicates that these variables are linearly related.

With linear regression, you can fit a line through the data. For instance, as the EngineSize increases, so do the emissions. With linear regression, you can model the relationship between these variables.

A good model can be used to predict what the approximate emission of each car is.

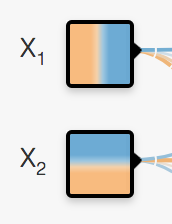

We’re going to predict the target value, y, in our case using the independent variable, "Engine Size," represented by x1. The fit line is shown traditionally as a polynomial. In a simple regression problem (a single x), the form of the model would be ŷ = θ0 + θ1 x1. In this equation, ŷ is the dependent variable or the predicted value, and x1 is the independent variable; θ0 and θ1 are the parameters of the line that we must adjust. θ1 is known as the "slope" or "gradient" of the fitting line and θ0 is known as the "intercept." θ0 and θ1 are also called the coefficients of the linear equation. You can interpret this equation as ŷ being a function of x1, or ŷ being dependent on x1. Now the questions are: "How would you draw a line through the points?" And, "How do you determine which line ‘fits best’?"

Linear regression estimates the coefficients of the line. This means we must calculate θ0 and θ1 to find the best line to ‘fit’ the data. This line would best estimate the emission of the unknown data points. Let’s see how we can find this line, or to be more precise, how we can adjust the parameters to make the line the best fit for the data.

For a moment, let’s assume we’ve already found the best fit line for our data. Now, let’s go through all the points and check how well they align with this line. Best fit, here, means that if we have, for instance, a car with engine size x1=5.4 and actual Co2=250, its Co2 should be predicted very close to the actual value, which is y=250, based on historical data. But if we use the fit line, or rather our polynomial with known parameters, to predict the Co2 emission, it returns ŷ=340.

Now, if you compare the actual value of the emission of the car with what we predicted using our model, you will find that we have a 90-unit error. This means our prediction line is not accurate. This error is also called the residual error. So, we can say the error is the distance from the data point to the fitted regression line. The mean of all residual errors shows how poorly the line fits the whole dataset. Mathematically, it can be expressed as the mean squared error (MSE). Our objective is to find a line where the mean of all these errors is minimized. In other words, the mean error of the prediction using the fit line should be minimized. Let’s re-word it more technically. The objective of linear regression is to minimize this MSE equation, and to minimize it, we should find the best parameters, θ0 and θ1. Now, the question is, how do we find θ0 and θ1 in such a way that this error is minimized? How can we find such a perfect line? Or, said another way, how should we find the best parameters for our line? Should we move the line around randomly, calculate the MSE value every time, and choose the minimum one?
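For reference, the mean squared error is commonly written as follows, where n is the number of data points, y_i is the actual value and ŷ_i is the value predicted by the line. The index notation is an assumption here, since the slide equation is not reproduced in this transcript.

$$
\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2
$$

A minimal Python sketch of the same calculation might look like this. The function name `mean_squared_error` is chosen for illustration only, and the single data point is the car from the example above.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared residuals between actual and predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equally long")
    squared_errors = [(y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)]
    return sum(squared_errors) / len(actual)


# The car from the example: actual emission 250, predicted emission 340.
residual = 250 - 340  # -90, i.e. a 90-unit error
print(residual)

# With only this one point, the MSE is just its squared residual.
print(mean_squared_error([250], [340]))  # 8100.0
```

Squaring the residuals keeps positive and negative errors from cancelling each other out and penalizes large misses more heavily than small ones.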

Not really! Actually, we have two options here: Option 1 - We can use a mathematical approach. Or, Option 2 - We can use an optimization approach.

Let’s see how we can use a mathematical formula to find θ0 and θ1. As mentioned before, θ0 and θ1, in simple linear regression, are the coefficients of the fit line. We can use a simple equation to estimate these coefficients. That is, given that it’s a simple linear regression with only 2 parameters, and knowing that θ0 and θ1 are the intercept and slope of the line, we can estimate them directly from our data. It requires that we calculate the mean of the independent column and of the dependent (target) column from the dataset. Notice that all of the data must be available to traverse and calculate the parameters. It can be shown that the intercept and slope can be calculated using these equations.

We can start off by estimating the value for θ1. This is how you can find the slope of a line based on the data. x̄ is the average value of the engine size in our dataset. Please consider that we have 9 rows here, row 0 to 8. First, we calculate the average of x1 and the average of y. Then we plug them into the slope equation to find θ1. The xi and yi in the equation refer to the fact that we need to repeat these calculations across all values in our dataset, and i refers to the i’th value of x or y. Applying all values, we find θ1=39; it is our second parameter. It is used to calculate the first parameter, which is the intercept of the line. Now, we can plug θ1 into the line equation to find θ0. It is easily calculated that θ0=125.74. So, these are the two parameters for the line, where θ0 is also called the bias coefficient and θ1 is the coefficient for the EngineSize column.

As a side note, you really don’t need to remember the formula for calculating these parameters, as most of the libraries used for machine learning in Python, R, and Scala can easily find these parameters for you. But it’s always good to understand how it works.
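The slope and intercept estimates described here are usually written as θ1 = Σ(xi − x̄)(yi − ȳ) / Σ(xi − x̄)² and θ0 = ȳ − θ1·x̄. As a minimal sketch in plain Python, they could be computed as shown below. The function name `fit_simple_linear_regression` and the sample values are hypothetical and chosen only for illustration; they are not the rows of the dataset discussed in this lesson.

```python
def fit_simple_linear_regression(x, y):
    """Return (theta0, theta1) for the least-squares line y_hat = theta0 + theta1 * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")

    # Means of the independent and dependent columns.
    x_bar = sum(x) / len(x)
    y_bar = sum(y) / len(y)

    # Slope: sum of (xi - x_bar)(yi - y_bar) divided by sum of (xi - x_bar)^2.
    numerator = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    denominator = sum((xi - x_bar) ** 2 for xi in x)
    if denominator == 0:
        raise ValueError("x values must not all be identical")
    theta1 = numerator / denominator

    # Intercept: the fitted line passes through the point (x_bar, y_bar).
    theta0 = y_bar - theta1 * x_bar
    return theta0, theta1


# Hypothetical illustration values (NOT the dataset from this lesson).
engine_size = [1.0, 2.0, 3.0, 4.0]
co2_emission = [150.0, 190.0, 230.0, 270.0]

theta0, theta1 = fit_simple_linear_regression(engine_size, co2_emission)
print(f"theta0 = {theta0:.2f}, theta1 = {theta1:.2f}")  # theta0 = 110.00, theta1 = 40.00
```

Because the illustration values lie exactly on a line, the fit recovers that line exactly; with real data the points scatter around the line and the estimates minimize the squared error instead.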

Now, we can write down the polynomial of the line. So, we know how to find the best fit for our data, and its equation. Now the question is: "How can we use it to predict the emission of a new car based on its engine size?"

After we have found the parameters of the linear equation, making predictions is as simple as solving the equation for a specific set of inputs. Imagine we are predicting Co2 Emission (y) from EngineSize (x) for the automobile in record number 9. Our linear regression model representation for this problem would be: ŷ = θ0 + θ1 x1. Or, if we map it to our dataset, it would be Co2Emission = θ0 + θ1 × EngineSize. As we saw, we can find θ0 and θ1 using the equations that we just talked about.

Once found, we can plug them into the equation of the linear model.

For example, let’s use θ0=125 and θ1=39. So, we can rewrite the linear model as Co2Emission = 125 + 39 × EngineSize. Now, let’s plug in the 9th row of our dataset and calculate the Co2 Emission for a car with an EngineSize of 2.4. So Co2Emission = 125 + 39 × 2.4.

Therefore, we can predict that the Co2 Emission for this specific car would be 218.6.
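The same prediction can be sketched in a few lines of Python. The function name `predict_co2_emission` is hypothetical; the default parameter values are the θ0=125 and θ1=39 used in the example above.

```python
def predict_co2_emission(engine_size, theta0=125.0, theta1=39.0):
    """Evaluate the fitted line: Co2Emission = theta0 + theta1 * EngineSize."""
    return theta0 + theta1 * engine_size


# Prediction for the car with an engine size of 2.4.
prediction = predict_co2_emission(2.4)
print(f"{prediction:.1f}")  # 218.6
```

Once the two parameters are known, predicting for any other car only requires calling the function with a different engine size.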